The multidistrict litigation (MDL 3047) has grown to 1,867 cases as of July 2025, consolidating claims against Instagram and other social media apps under Judge Yvonne Gonzalez Rogers in the Northern District of California.

These social media addiction lawsuits allege Instagram’s algorithms intentionally foster addiction and psychological harm, particularly among users who were minors, with claims ranging from depression to wrongful death.

As the litigation advances toward bellwether trials in October 2025, families nationwide are joining forces to hold Meta, Instagram’s parent company, accountable for prioritizing profits over user safety.

The Social Media Addiction MDL (MDL 3047)

MDL 3047 had 1,867 pending cases as of July 2025, spanning both personal injury claims from families and institutional cases from school districts seeking compensation for increased mental health service costs.

This consolidation combines individual tragedy with systemic harm, creating one of the largest social media litigation efforts in U.S. history.

The MDL structure allows plaintiffs to share discovery resources and coordinate legal strategies while maintaining their individual claims for damages.

Judge Rogers’ oversight has been central to advancing these cases, particularly her October 2024 ruling that allowed negligence claims to proceed and rejected tech companies’ traditional Section 230 immunity arguments.

Allegations Against Instagram’s Algorithm Design

Instagram’s allegedly addictive features form the core of plaintiffs’ claims, with internal Meta documents revealing the company knowingly deployed infinite scroll, autoplay videos, and strategically timed push notifications designed to increase user engagement time.

These features work together to create what experts describe as a “dopamine slot machine,” exploiting teenagers’ developing brains and natural vulnerability to social validation.

The algorithms specifically target users showing signs of mental health struggles, pushing increasingly harmful content to keep them engaged and draw vulnerable teens deeper into the platform.

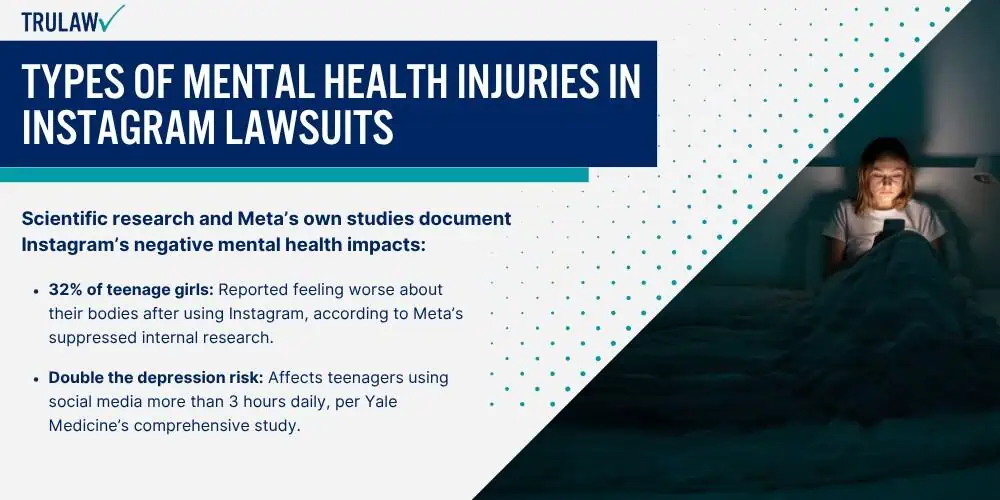

Internal Meta documents revealed through discovery demonstrate the company’s awareness of Instagram’s harmful effects:

- The “Facebook Papers”: Revealed Instagram worsens body image issues for 1 in 3 teenage girls, yet executives continued prioritizing engagement metrics.

- Internal addiction research: Confirmed Instagram’s infinite scroll and notification features create addictive behaviors mirroring substance dependency in adolescent users.

- Employee whistleblower warnings: Warnings about teen mental health impacts were systematically ignored by leadership pursuing growth targets.

- User experience studies: Documented teenagers self-reporting feeling “addicted” and unable to control their Instagram usage patterns.

- “Tween targeting” evidence: Exposed deliberate strategies to attract users ages 10-12 despite official policies requiring users to be 13 or older.

Meta’s internal research documented in the Facebook Papers shows Instagram worsens body image issues for 1 in 3 teen girls, yet the company continued refining engagement features while publicly denying causation.

Whistleblower Frances Haugen’s testimony before Congress revealed executives repeatedly chose profits over safety, with one internal document showing that a test account an Instagram employee set up as a 13-year-old was quickly directed to pro-anorexia content.

These revelations demonstrate Meta not only knew about Instagram’s harm but actively designed features to exploit teen psychology for profit.

Legal Theories Supporting Instagram Claims

The primary legal theories center on product liability for defective design and negligence in implementing safeguards, with plaintiffs arguing Instagram’s algorithm constitutes an unreasonably dangerous product when used by minors.

Unlike traditional Section 230 cases involving third-party content, these lawsuits target Instagram’s own design choices and algorithmic recommendations.

Courts have recognized this distinction, allowing claims to proceed on the theory that Meta’s conduct goes beyond merely hosting content to actively creating user addiction.

Plaintiffs pursue multiple legal theories against Instagram’s parent company, each targeting different aspects of Instagram’s harmful design:

- Strict product liability: For creating an algorithmically driven platform that functions as a defective product when used by minors

- Failure to warn: Not alerting parents and users to documented addiction risks and mental health dangers Meta knew of through internal research

- Fraudulent concealment: Hiding research showing Instagram’s negative impacts while publicly claiming the platform was safe for teens

- Negligent age verification: Relying on systems that Meta knew were ineffective at preventing underage users from accessing harmful content

- State consumer protection violations: For deceptive marketing practices targeting minors with addictive features, violating FTC consumer protection guidelines

- Negligent infliction of emotional distress: Through algorithms designed to exploit psychological vulnerabilities in developing minds

The court’s rejection of Meta’s Section 230 immunity defense has fundamentally strengthened plaintiffs’ positions, establishing that social media companies can be held liable for their own algorithmic designs rather than hiding behind protections meant for neutral platforms.

Judge Rogers specifically noted that negligence law provides a “flexible mechanism to redress evolving means for causing harm,” recognizing that traditional legal theories can adapt to address modern technological dangers.

This precedent-setting approach opens the door for accountability that has long eluded social media victims.

If you or a loved one experienced depression, anxiety, eating disorders, self-harm, or other mental health injuries related to Instagram use, you may be eligible to seek compensation.

Contact TruLaw for a case evaluation to determine whether you qualify to join others in filing an Instagram lawsuit.